6 practical AI tools I used last week

And how they show you the future normal of work

AI is moving too fast for anyone to keep up with. Which is why sometimes it’s good to get away from the theoretical and focus on the deeply practical.

How am I using AI, personally? I'm not going to waste your time sharing an AI-generated summary of the tools everyone knows and uses. Yes, I used Claude and ChatGPT to review and refine this draft. I used Canva for some of the images. I used Perplexity for research (although my usage is falling fast now that ChatGPT seems just as reliable and more aware of what I’m after). Like most of you, my digital footprint now skews heavily towards AI tools. I barely Google anything any more.

But this week I want to share six lesser-known tools and features which might be new to some of you, but which have transformed my working life.

I’ll share details of exactly how I use these tools. But the bigger idea underpinning this newsletter is that today’s innovations help you spot tomorrow’s biggest trends and opportunities. So I’ll also share how each tool gives you a glimpse of the future normal of AI-augmented work.

In an exponential world, keeping up is a team sport. So I'd love to hear from you - which tools / use cases have transformed your work recently?

Share them in the comments, or DM me. I'll send a copy of The Future Normal to any that I feature in next week's edition.

Before we get into it: a note from our sponsor (me!). Many readers here discover me via my keynotes. If you’re planning your next strategy session, sales kick-off, or customer conference – 🚨 I’d love to help. 🚨

Typically I inspire clients with:

Your Future Normal: a trend keynote, bringing you non-obvious yet relevant and practical insights from outside your industry.

VisuAIse Futures: a new interactive, ‘multiplayer’ AI-powered creative experience. Watch the 2-min highlight video.

Read more about these at the bottom of this email, or reach out to Renee Strom on renee@ideapress-speakers.com if you’d like to bring me to your next event.

And if I’m not a good fit, then do check out the best speakers I saw in 2024.

Granola and augmented attention

There are a bunch of AI meeting assistants, such as Otter, Fireflies, and Zoom. But then Granola came into my life, and I now can't imagine living without its bullet-pointed, well-structured meeting summaries.

Why it matters? // It’s not easy for an AI product to cross the chasm and go from 'meh' to magical. Fall short and, like too many AI products today, you leave users underwhelmed. Get it right, and you'll unleash an evangelist who will shout about your product from the rooftops (like I'm doing here!).[1]

Where next? // There’s currently a gap between AI-augmented online meetings, and real world interactions. I'm excited for my Limitless pendant to arrive, which promises to bridge this gap.

Infinite, perfect memory (some might call it Total Recall ;) is a polarising concept. Beyond the cultural questions around consent and privacy, there are big and very valid questions about what we will lose when we outsource our second brains to AI. My view? Note taking will become like weightlifting, a behaviour that you have to consciously lean into to get its benefits.

Wispr Flow and the shift to voice

Wispr Flow might be the app that's driving the biggest change in how I use my computer in the last 20 years. Now I find myself dictating the vast majority of my shorter, more ephemeral emails and WhatsApp messages.[2]

Why it matters? // Voice input is an interesting beast, because it’s exponentially better in certain contexts, and exponentially worse in others. I felt like a dinosaur watching my kids default to voice search on our Amazon Fire Stick. Voice makes perfect sense there, because it’s clunky to use the remote to browse thousands of options spread across Netflix, Prime, Disney, BBC, and more. But don’t get me started on the ‘promise’ of voice-enabled shopping, which Alexa spontaneously attempts on my behalf all too frequently.

Similarly, I love using ChatGPT’s dictation feature (it’s much better than the iPhone’s native voice dictation feature) to ramble for a few minutes as I start exploring an idea. But I find it hard to dictate longer thought pieces – there's something about the slower pace of typing that gives me time to think through ideas as I write them.

Where next? // Some of you might have seen Sesame's recent viral AI voice demo. If you haven't, then I’d highly recommend trying it out for yourself (it’s free and open, no sign-up required!). Check out a short conversation I had with it recently:

Sesame’s demo gets us closer to the zero-latency experience imagined in Spike Jonze’s Her. The promise of two-way voice interaction is profound – moving our relationship with technology from one that’s directive and functional, to one that’s more exploratory, and authentically emotionally engaging.

This has been a long time coming – I’ve been writing about Virtual Companionship in various iterations for nearly a decade now! But it’s a shift that will have huge social and cultural implications. As the line often attributed to McLuhan goes, “we shape our tools, then they shape us”.

One thing is certain – I’ll keep watching, listening, and increasingly, talking.

Boardy and AI-facilitated human connection

Talking of connections – I’ve written at length about Boardy before, but it remains one of the most exciting practical use cases of AI that I've experienced.

You have a 5-minute phone call with an Australian-accented AI. It asks what you're working on and the types of people you’d like to meet. It then digs into its network and, if it finds someone suitable, sends both parties a double opt-in email before making the connection.

Why it matters? // The common thread in all my writing is that the most successful innovations are those that serve humans. Boardy is brilliantly ambitious, and couldn’t have been done before AI. But it taps into a long-standing professional need – to meet relevant new people. And, like Granola, it just works. I've connected with three new interesting people in the past month, as well as reconnecting with someone I already knew (which I guess is testament to its matching engine!). Check it out here.

NotebookLM and accessible YouTube summaries

I’ve played around with NotebookLM before, creating AI-generated mini podcast discussions from workshop outputs (another very cool use case!).

I’ve also recently started using it to help extract information from YouTube videos. You can drop in a link and ask it for a summary of the key points in a format that's much more accessible and easy to read than the transcripts.

Why it matters? // Somewhat ironically, given my evangelising of the voice tools above, I'm not a huge fan of the shift to video content. I find it a very inefficient way to consume information. You can't skim to get the key points. There's no visible information architecture guiding you. It takes a long time to uncover whether watching and listening to something is worth your time. However, there are some amazing insights contained within the millions of podcasts produced each week.

The bigger point is that AI can help you tame information overload. As well as a general summary, you can ask it specific questions – “I’m interested in X. Which bits are most relevant to me?”

Having spent nearly 15 years writing reports despite knowing that 90% of the audience will only skim the headlines, this is a fascinating development. Now, having a library of 200+ page reports might be useful. Not because you’ll read them in full, but because they will create a robust and comprehensive foundation (assuming they’re not full of AI-generated hallucinations!) that you can interrogate at will using AI. Scale this up to an organisation’s knowledge base, and things get really interesting.

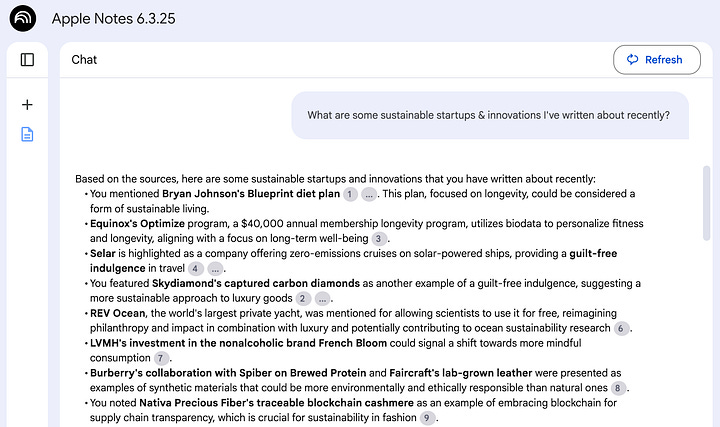

Apple Notes and ChatGPT / NotebookLM and your second brain

I’ve tried countless note-taking programs over the years, but after a flurry of enthusiasm, I usually find myself back in Apple Notes after a few months. Recently, I wondered if I might be able to get a bit more value from my notes. Thanks to ChatGPT, I was able to learn how to combine all my notes from the last 6 months into a single text file, which I can then query via ChatGPT and / or NotebookLM, asking it specific questions.[3]

Personally, while I prefer ChatGPT’s insights, NotebookLM’s sourcing is much more precise – you can click directly back to the original text. Both are incredible intellectual sparring partners.
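For the curious, the 'combine everything into one text file' step could be sketched roughly like this in Python. Treat it as an illustration under stated assumptions rather than the exact method used here: it assumes the notes have already been exported as plain .txt files into one folder (getting them out of Apple Notes is not covered), and the names combine_notes, notes_dir, output_file and days are all hypothetical.

```python
from datetime import datetime, timedelta
from pathlib import Path


def combine_notes(notes_dir, output_file, days=183, now=None):
    """Merge recently modified .txt notes into one file and return how many were merged.

    Assumption: each note is a UTF-8 .txt file sitting directly in notes_dir.
    """
    now = now or datetime.now()
    cutoff = now - timedelta(days=days)  # roughly the last six months by default
    output_path = Path(output_file).resolve()

    sections = []
    for path in sorted(Path(notes_dir).glob("*.txt")):
        # Skip the combined file itself if it lives in the same folder.
        if path.resolve() == output_path:
            continue
        modified = datetime.fromtimestamp(path.stat().st_mtime)
        if modified < cutoff:
            continue
        text = path.read_text(encoding="utf-8").strip()
        # A header per note makes it easier for an AI tool to cite its source.
        sections.append(f"=== {path.stem} ({modified:%Y-%m-%d}) ===\n{text}\n")

    output_path.write_text("\n".join(sections), encoding="utf-8")
    return len(sections)
```

Calling combine_notes("exported_notes", "all_notes.txt") would then produce a single file to upload to ChatGPT or NotebookLM. The per-note headers are a design choice: they give the AI something to point back to when you ask where an idea came from.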

Deep Research and updating The Future Normal

Last, but by no means least, is Deep Research. As I wrote last week, this was the first tool to give me an existential sense of my impending replaceability.

In typical AI fashion, it’s also transforming my day-to-day in hugely positive ways. We’re in the middle of updating The Future Normal, ahead of its paperback launch. I can now drop each chapter into ChatGPT and ask it to research new developments since we wrote the book. In minutes, it can synthesise insights from 30+ sources, turning a 2-3 day task into a 2-3 hour task.

Why it matters? // Before anyone gets upset – we’re not using AI to write the new sections. Indeed, as I said in my full piece on Deep Research and thinking about thinking with AI, my early experiences showed that these tools work best when you know a lot about the topic that you ask them to research. And in this context:

we still think deeply about where to direct its research. Generic inputs lead to generic outputs;

we’ve also written extensively about these topics ourselves. Both in the book itself and in the three years since its publication, we’ve formulated and shared insights on how these ideas have developed;

we still have to digest and then curate its output. Its ideas aren’t ready to publish. But they’re ready to review and reflect on.

When it comes to the book, the words and ideas will remain ours. But we now have a third ‘pre-author’ – AI.

There you have it. Six non-obvious, yet deeply practical tools that are transforming the way I work on a daily basis. As I said at the top, I’d love to extend this piece by crowdsourcing and learning from you:

What’s the most useful, non-obvious AI tool or workflow you used last week?

Share them in the comments, or DM me. I'll send a copy of The Future Normal to any that I feature in next week's edition.

Next: Inspire your team to thrive in the future normal

In the last 12 months I’ve delivered 30+ sessions, both live and virtually – from Brazil to Saudi Arabia, Las Vegas to London.

My regular trend & innovation keynotes bring fresh, cross-industry, people-first perspectives to your audience.

VisuAIse Futures takes it one step further, turning a keynote into an interactive, ‘multiplayer’ creative experience that gets your team excited at how they can use AI to accelerate your innovation culture.

Here’s what people are saying about it:

“It was so refreshing to hear how AI can be used to power human imagination, rather than replace it. And then it was even better to actually experience it”

“Fantastic session! Hugely insightful and fun, too!”

“Brilliant. The feeling in the room was positively intense whilst the images were coming through!”

Feel the optimistic vibes it will bring to your event in the 2-minute video below (or watch it here).

If you’d like to discuss bringing me to your next meeting or event then please do reach out directly to Renee Strom or check out my speaking site.

Thanks for reading,

Henry

[1] On that note, if you’re building an AI product then it’s well worth reading this interview with Chris Pedregal, Granola’s founder, where he talks about why its narrow focus, opinionated vision and smaller user base help them deliver such a great user experience.

[2] I guess now is a good place to apologise if you've received a message from me with a nonsensical word slipped in!

[3] While writing this, it occurred to me that it would be great to be able to automatically push all my Granola meeting notes into Apple Notes, as well as the full text of certain ChatGPT answers, so that they’re better integrated into my second brain. That’s next week’s project sorted ;)
