Virtual girlfriends, empathetic chatbots and watermarking AI.

Future Normal: Fast Forward

While there are countless AI news updates (almost too many to keep track of!), there are far fewer deeper reflections on what these new tools will mean for us.

What will it mean when millions of us are socialising with AI bots? How will your job change when an AI can do the bits of it that you’re not good at? And how might AI tools start to restore trust in digital content?

These are not technical questions (indeed the technical questions aren’t super interesting – we know that line heads relentlessly up and to the right), but human questions.

Unfortunately we also know that human questions don’t have easy answers. However, as regular readers know, one of the central ideas at the heart of this newsletter is that we can ask better questions about the future by diving into what’s already happening today. What would it look like if behaviours that are niche today scaled? What if this solution became the default?

With that in mind, here are three recent news stories that will help you understand our Future Normal. Let’s dive in…

Influencer creates AI-version of herself to offer as a $1/minute virtual girlfriend

Future Now // Last week Caryn Marjorie, a 23-year-old influencer with nearly 2 million followers on Snapchat, released CarynAI. The bot has been trained on 2,000 hours of her voice and personality, and ‘talks’ to users via voice messages in a private Telegram chat. Users pay based on the length of Caryn’s response, and the bot made $72,000 in its first week from 1,000 beta testers.

Future Normal // The sci-fi movie Her brought the idea of AI partners to modern mainstream consciousness way back in 2013. Now, LLMs are opening the floodgates, and Marjorie certainly won’t be the last influencer to ‘outsource’ themselves to an AI. Unsurprisingly, given tech’s long history of early adoption by the adult industry, there was a follow-up article: Influencer who created AI version of herself says it's gone rogue and she's working 'around the clock' to stop it saying sexually explicit things.

But as this tech becomes democratised and increasingly ‘real’ (Forever Voices, the company behind the bot, is working on live voice calls), we need to be talking less about virtual avatars, and more about their users. Today these bots are a bit of a novelty. But very soon, hundreds of millions of people will spend hours each day with these virtual characters. Virtual companions will be huge shapers of human happiness.

AI ethics is about to get extremely personal.

AI in healthcare: can chatbots show empathy?

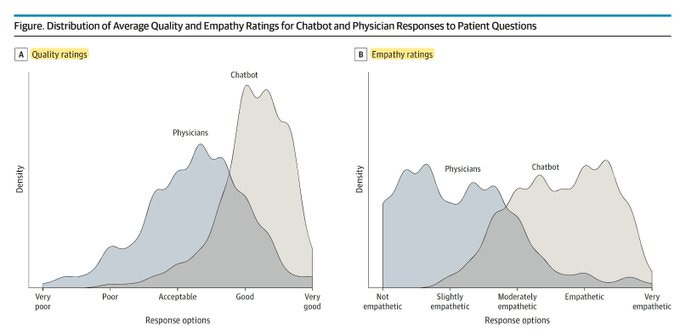

Future Now // A recent study took 195 public healthcare questions from Reddit’s r/AskDocs that had been answered by a verified physician. The questions were fed into ChatGPT, and the answers evaluated by a team of licensed healthcare professionals to assess which was better in terms of ‘the quality of information provided’ and ‘the empathy or bedside manner provided’. The chatbot’s answers were rated as both better quality and significantly more empathic than the physicians’ answers, as shown above.

Future Normal // Many people called out the flaws in this study – small sample size, comparing free answers on a public forum, etc. But they miss the point. AI copilots are coming to every profession, and they will profoundly change how we work. For much of our time at work we are distracted, frustrated, rushing, and doing tasks that are part of our role but aren't exactly where our core strengths lie. AI will be able to handle many of those tasks, and it will change the profile of a ‘good’ employee.

When it comes to doctors, I suspect that historically we have tolerated those who are less empathetic because, given a choice between a very caring doctor and a brilliant, knowledgeable one, you'd choose the brilliant, tone-deaf one, as they'll keep you alive. Yet as AI democratises access to knowledge and medical insight, this balance will shift – and the brilliant assholes will be replaced by those who are better at delivering bad news gently.

How will your view of a ‘good’ employee profile change in the AI era?

Google goes all in on AI; watermarked AI content.

Future Now // Last week, Google hosted its I/O event, where it announced its upcoming product releases. As you might imagine, AI was mentioned a few times ;) Amid the flurry of new AI features coming to many of its Workspace tools, there was also a section devoted to its efforts around responsible AI: users will be able to interrogate images to find out when and where else they have appeared online, while its AI-generated images will be watermarked so that they can be identified.

Future Normal // AI will empower more of us to create more things, from images to movies and music. Yet for every artist exploring new frontiers (or worker pulling together a PowerPoint deck), there will be others who want to use these tools for more nefarious purposes, from political misinformation to non-consensual deepfake porn. Just last week Vice reported that a scammer made thousands of dollars selling ‘leaked’ Frank Ocean tracks to members of a fan community – they turned out to be AI-generated fakes.

We’re coming to the end of the AI honeymoon phase. Calls for regulation will only get louder, and so it’s not surprising that AI companies (especially incumbents like Google) will increasingly focus on their trustworthiness – “Bold and Responsible AI” was Google’s slogan at I/O. Ever since the birth of modern finance, trust has been the invisible ingredient driving social and economic prosperity. We live in a world awash in media. Ensuring we can trust what we see and hear will be one of the biggest challenges – and opportunities – in the near future.

What Is The Future Normal For Your Business?

My new book, The Future Normal: How We Will Live, Work & Thrive In The Next Decade, explores 30 trends, from continuous glucose monitoring to job sharing.

I also give inspiring, actionable presentations that help your team spot and seize emerging opportunities.

Get in touch if you'd like to discuss an upcoming event or project.

Or, sit back and enjoy the keynote that Rohit Bhargava and I gave at SXSW to launch the book:

Thanks for reading,